
Java AI Playground from Mark Winteringham's AG25 Session

Dive into the Java AI Agents Playground, my fork of Mark Winteringham's brilliant AI Assistant repo, designed for QA automation engineers eager to explore AI-driven testing. I have added features like Docker-based DevContainers, Logback logging, and Resilience4j retries. This project offers a hands-on platform to experiment with building AI-assisted test frameworks with AI agents in Java. Clone it, tweak it, and start mastering modern QA automation today!

As a QA automation architect with over 15 years of experience, I’m thrilled to share marcandreuf/ag25-ai-assistant, my fork of Mark Winteringham’s AI Assistant repository at mwinteringham/ag25-ai-assistant. Thank you to Mark for building the foundation of this project and for his insightful session at the Automation Guild 2025 conference, where he explained the details with clarity and passion. This Java-based AI agent demo is a practical tool and a learning platform for QA engineers looking to design and implement test automation frameworks. In this post, I’ll walk you through the project’s purpose, technical features, and value as a template for your automation initiatives. It is written for QA automation professionals who want to start experimenting with AI agents in Java.

Project Purpose: A Playground for QA Automation and AI Integration

The AI Assistant project simulates a room booking system powered by an AI agent that processes natural language inputs (e.g., “Create a single room with one bed for $100 per night”). While its core functionality demonstrates AI-driven intent recognition and database interaction, this is the tip of the iceberg of what is actually possible for assisted QA practices. The repository’s primary goals are:

- Learning Platform: Provide a hands-on environment to explore AI agent behaviour, test automation, and framework design in Java.

- Test Framework Template: Offer a reusable blueprint for building robust, maintainable automation frameworks with features like logging, retries, and test isolation.

- Experimentation Hub: Enable QA engineers to experiment with AI-driven test scenarios, such as validating semantic request handling by large language models (LLMs).

These two repositories are good starting points for QA engineers to practise unit testing, integration testing, and resilience testing while also exploring modern development practices like containerized environments.

Technical Features: Built for QA Automation Excellence

The repository incorporates several features. Below, I’ll summarise the key technical components and explain how they support testing and framework development.

1. DevContainer Setup with Docker Compose

What It Is: A .devcontainer configuration that spins up a consistent, isolated development environment using Docker Compose.

QA Value:

- It ensures reproducibility across machines, eliminating “it works on my machine” issues—a common pain point in test automation.

- It pre-installs Git, Java, and development tools so you can focus on writing tests instead of configuring environments.

- Simplifies CI/CD integration by mirroring production-like setups, which is critical for reliable test execution.

Use Case: Run the project in VS Code or IntelliJ with zero setup hassle and execute unit or integration tests in the same environment.

2. Database Reset for Test Isolation

What It Is: A @BeforeEach hook in the DataQueryTest suite that resets the database before each unit test.

QA Value:

- Guarantees test independence, preventing flaky tests caused by leftover data—a cornerstone of reliable automation.

- Allows tests to run in any order, simplifying test suite maintenance.

Use Case: Study the reset logic to implement similar strategies in your projects, ensuring clean test environments.
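
The reset-before-each-test idea can be sketched in plain Java. This is a minimal sketch under stated assumptions, not the code in DataQueryTest: RoomTable, its seed data, and the runIsolated helper are hypothetical stand-ins, and runIsolated plays by hand the role that JUnit's @BeforeEach plays in the real suite, so the example stays dependency-free.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;

final class ResetSketch {
    // Hypothetical in-memory stand-in for the project's database table.
    static final class RoomTable {
        private static final List<String> SEED = Arrays.asList("single", "double");
        private final List<String> rooms = new ArrayList<>(SEED);

        // Restore the known baseline; a @BeforeEach hook would call this.
        void reset() {
            rooms.clear();
            rooms.addAll(SEED);
        }

        void add(String room) { rooms.add(room); }
        void deleteAll() { rooms.clear(); }
        int count() { return rooms.size(); }
    }

    // Mimics the test lifecycle: reset first, then run the test body.
    // Returns the row count the test body left behind.
    static int runIsolated(RoomTable table, Consumer<RoomTable> test) {
        table.reset();
        test.accept(table);
        return table.count();
    }

    public static void main(String[] args) {
        RoomTable table = new RoomTable();
        // A destructive test followed by an additive one: neither sees the other's data.
        int afterDelete = runIsolated(table, t -> t.deleteAll());
        int afterAdd = runIsolated(table, t -> t.add("suite"));
        System.out.println(afterDelete + " " + afterAdd); // prints "0 3"
    }
}
```

Because every test starts from the same two seed rows, swapping the order of the two calls in main gives the same counts, which is the property that lets a suite run in any order.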

3. Structured Logging with Logback

What It Is: Integration of Logback as the logging provider, configured via logback.xml for readable, structured logs.

QA Value:

- Enhances test debugging by providing clear, formatted logs—essential for diagnosing failures in complex test suites.

- It serves as a model for adding logging to your automation frameworks, improving traceability.

- Supports log analysis for identifying patterns in test failures or performance issues.

Use Case: Analyze logs to troubleshoot AI agent behaviour or extend logging for custom test reporting.

4. Resilience4j for Retry Logic

What It Is: Retry mechanisms using Resilience4j to handle transient failures, such as rate-limiting errors from external APIs. This matters most when testing against free models with low rate limits: runs take longer, but they stay free for learning and small experiments.

QA Value:

- Models robust error handling, a critical feature for automation frameworks dealing with flaky APIs or services.

- Includes fallback strategies, teaching QA engineers to maintain test stability under failure conditions.

- Encourages experimentation with resilience patterns, like circuit breakers or timeouts, in test automation.

Use Case: Adapt the retry logic for your API tests to reduce false negatives and improve test reliability.
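
To make the retry pattern concrete, here is a minimal sketch in plain Java. It deliberately does not use the Resilience4j API, so it is not the repository's configuration: callWithRetry, its parameters, and the simulated "429" failure are all hypothetical, and the doubling wait is just one common backoff choice.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

final class RetrySketch {
    // Retries the call on RuntimeException, doubling the wait after each failure.
    // Rethrows the last failure once maxAttempts is exhausted.
    static <T> T callWithRetry(Supplier<T> call, int maxAttempts, long initialWaitMillis) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        long wait = initialWaitMillis;
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return call.get();
            } catch (RuntimeException e) {
                last = e;
                if (attempt == maxAttempts) {
                    break; // no point waiting after the final attempt
                }
                try {
                    Thread.sleep(wait);
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException("interrupted while waiting to retry", ie);
                }
                wait *= 2;
            }
        }
        throw last;
    }

    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        // Hypothetical flaky call: fails twice, as a rate-limited API might, then succeeds.
        String result = callWithRetry(() -> {
            if (calls.incrementAndGet() < 3) {
                throw new IllegalStateException("429 rate limited");
            }
            return "ok";
        }, 5, 10);
        System.out.println(result + " after " + calls.get() + " calls"); // prints "ok after 3 calls"
    }
}
```

In a real framework a library such as Resilience4j is usually the better choice, since it also lets you restrict which exceptions trigger a retry; the sketch only shows the control flow you would be configuring.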

5. Expanded AI Tools for Semantic Testing

What It Is: Additional tool methods (showRooms, showBookings) to test the AI’s ability to handle semantically similar requests.

QA Value:

- Provides a playground for testing AI-driven applications, a growing area in QA automation.

- Enables validation of LLM behaviour under varied inputs, simulating real-world user interactions.

- Demonstrates how to write tests for non-deterministic systems, a unique challenge in AI testing.

Use Case: Create test cases to verify the AI correctly distinguishes between “list rooms” and “show bookings,” refining your approach to testing intent recognition.
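
A table-driven check is one way to structure that kind of test. The sketch below is an illustration under assumptions: the tool names showRooms and showBookings come from the project, but stubResolve is a hypothetical keyword matcher standing in for the LLM call, and passRate is a helper invented for this example.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;
import java.util.function.Function;

final class IntentSketch {
    // Stub resolver standing in for the LLM; a real test would call the agent instead.
    static String stubResolve(String prompt) {
        String p = prompt.toLowerCase(Locale.ROOT);
        if (p.contains("booking") || p.contains("reservation")) {
            return "showBookings";
        }
        if (p.contains("room")) {
            return "showRooms";
        }
        return "unknown";
    }

    // Returns the fraction of prompts that were routed to the expected tool.
    static double passRate(Map<String, String> expectedByPrompt, Function<String, String> resolver) {
        if (expectedByPrompt.isEmpty()) {
            throw new IllegalArgumentException("at least one case is required");
        }
        int passed = 0;
        for (Map.Entry<String, String> e : expectedByPrompt.entrySet()) {
            if (e.getValue().equals(resolver.apply(e.getKey()))) {
                passed++;
            }
        }
        return (double) passed / expectedByPrompt.size();
    }

    public static void main(String[] args) {
        // Semantically similar phrasings mapped to the tool each should trigger.
        Map<String, String> cases = new LinkedHashMap<>();
        cases.put("List rooms", "showRooms");
        cases.put("Which rooms do you have?", "showRooms");
        cases.put("Show bookings", "showBookings");
        cases.put("What reservations exist?", "showBookings");
        System.out.println("pass rate: " + passRate(cases, IntentSketch::stubResolve)); // prints "pass rate: 1.0"
    }
}
```

Reporting a pass rate rather than a single pass/fail is a design choice aimed at non-deterministic systems: against a real model you could repeat the table several times and assert that the rate stays above a threshold you choose.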

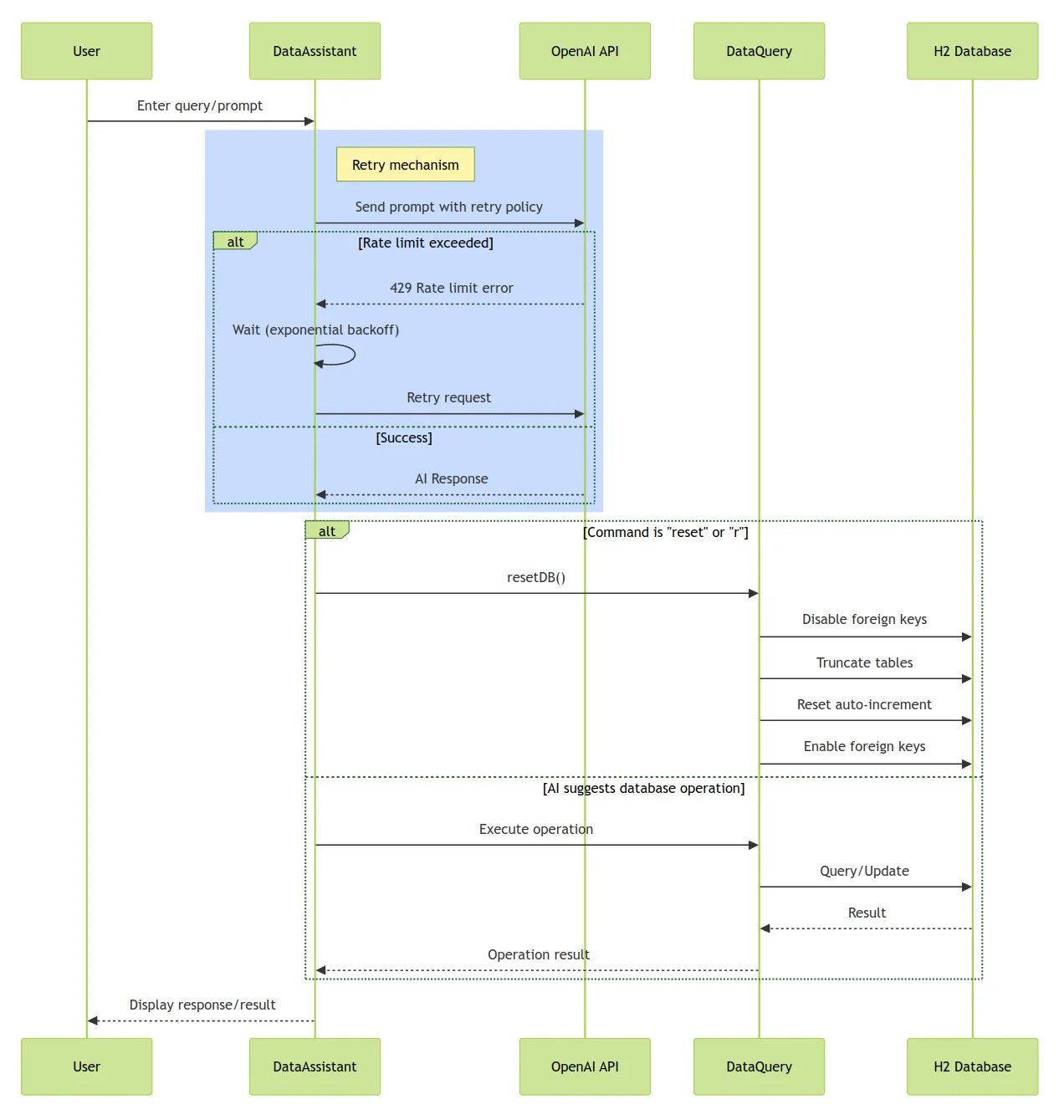

6. Sequence Diagram for Architecture Clarity

What It Is: A sequence diagram illustrating the AI Assistant’s workflow.

QA Value:

- Clarifies the system’s architecture, helping QA engineers map test coverage to specific components (e.g., AI processing, database interaction).

- It is a reference for designing visual documentation in automation projects and improving stakeholder communication.

Use Case: Use the diagram to identify test scenarios, such as edge cases in parameter extraction or database failures.

How QA Engineers Can Use This Project

This repository is designed to be both a learning tool and a practical template. Here’s how you can leverage it:

Clone and Experiment:

- Follow the setup instructions in the README to run the project locally.

- Write new unit tests for the AI tools or extend the database reset logic to handle more complex scenarios.

- Example: Add a test to verify the AI handles invalid inputs (e.g., “Create a room with -1 beds”).
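
One way to make the invalid-input example testable is to check the parameters the agent extracts before they reach the database. The sketch below assumes a hypothetical validate helper and hypothetical rules (at least one bed, a positive price); the project may handle invalid input differently.

```java
import java.util.ArrayList;
import java.util.List;

final class RoomInputSketch {
    // Returns human-readable problems; an empty list means the request is acceptable.
    static List<String> validate(int beds, double pricePerNight) {
        List<String> problems = new ArrayList<>();
        if (beds < 1) {
            problems.add("beds must be at least 1, got " + beds);
        }
        if (pricePerNight <= 0) {
            problems.add("price must be positive, got " + pricePerNight);
        }
        return problems;
    }

    public static void main(String[] args) {
        // Parameters an agent might extract from "Create a room with -1 beds" at $100 per night.
        System.out.println(validate(-1, 100.0)); // prints "[beds must be at least 1, got -1]"
        System.out.println(validate(1, 100.0));  // prints "[]"
    }
}
```

A test can then assert two things separately: that the agent extracted -1 from the prompt, and that the request was rejected rather than written to the database.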

Study Framework Design:

- Analyze the DataQueryTest suite to learn how to structure unit tests with JUnit.

- Review the Resilience4j configuration to implement similar fault tolerance in your frameworks.

- Example: Adapt the retry logic for a Selenium-based UI test suite to handle network timeouts.

Test AI Behavior:

- Use the expanded AI tools to create test cases that challenge the LLM’s intent recognition.

- Example: Test how the AI responds to ambiguous commands like “Show me everything” to uncover edge cases.

Integrate with CI/CD:

- Use the DevContainer setup as a blueprint for containerized test execution in pipelines.

- Example: Configure GitHub Actions to run the test suite in a Docker container, ensuring consistent results.

Additional Steps for QA Automation Experts

To make this project even more valuable, I recommend the following steps for deeper analysis and customization:

Analyze Test Execution Logs:

- Dive into the Logback-generated logs to identify patterns in AI agent performance or test failures.

- Add custom log levels (e.g., TRACE) to capture detailed debugging information during test runs.

- Example: Log the time taken for each AI processing step to optimize test execution speed.
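
Per-step timing can be sketched with a small wrapper. This example is an assumption-laden illustration: it uses java.util.logging instead of Logback so that it runs without dependencies, and the StepTimerSketch class and the step names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Supplier;
import java.util.logging.Logger;

final class StepTimerSketch {
    private static final Logger LOG = Logger.getLogger(StepTimerSketch.class.getName());
    private final Map<String, Long> elapsedMillis = new LinkedHashMap<>();

    // Runs the step, records and logs its duration, and returns the step's result.
    // The duration is recorded even if the step throws.
    <T> T time(String step, Supplier<T> work) {
        long start = System.nanoTime();
        try {
            return work.get();
        } finally {
            long ms = (System.nanoTime() - start) / 1_000_000;
            elapsedMillis.put(step, ms);
            LOG.info(step + " took " + ms + " ms");
        }
    }

    // Durations in the order the steps were first timed.
    Map<String, Long> timings() {
        return elapsedMillis;
    }

    public static void main(String[] args) {
        StepTimerSketch timer = new StepTimerSketch();
        // Hypothetical steps; real ones would wrap the LLM call and the database write.
        String intent = timer.time("resolve-intent", () -> "showRooms");
        Integer rows = timer.time("query-database", () -> 2);
        System.out.println(intent + " " + rows + " " + timer.timings().keySet());
    }
}
```

With SLF4J and Logback the LOG.info call would become the equivalent logger call, and the collected timings could feed a test report or a threshold assertion.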

Expand Test Coverage:

- Add integration tests to validate the workflow (user input → AI processing → database update).

- Example: Test the AI’s response to concurrent room creation requests to simulate real-world usage.
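
The concurrency example can be sketched with an ExecutorService. The RoomStore below is a hypothetical thread-safe stand-in for the rooms table, and createConcurrently is a helper invented for this illustration; a real integration test would send the requests through the agent and then count rows in the database.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicInteger;

final class ConcurrentCreateSketch {
    // Hypothetical thread-safe store standing in for the rooms table.
    static final class RoomStore {
        private final AtomicInteger nextId = new AtomicInteger();
        private final Set<Integer> ids = ConcurrentHashMap.newKeySet();

        int create() {
            int id = nextId.incrementAndGet();
            ids.add(id);
            return id;
        }

        int size() { return ids.size(); }
    }

    // Fires `requests` create calls from a pool of `threads` workers
    // and returns how many distinct rooms exist afterwards.
    static int createConcurrently(int threads, int requests) {
        RoomStore store = new RoomStore();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Callable<Integer>> tasks = new ArrayList<>();
            for (int i = 0; i < requests; i++) {
                tasks.add(store::create);
            }
            for (Future<Integer> f : pool.invokeAll(tasks)) {
                f.get(); // surfaces any failure from a worker
            }
            return store.size();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for requests", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("a create request failed", e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(createConcurrently(8, 100)); // prints "100"
    }
}
```

The assertion to carry over to a real test is the final count: if 100 concurrent requests leave fewer than 100 rooms, or duplicate IDs, the create path has a race.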

Why This Matters for QA Engineers

The AI Assistant repository isn’t just a demo; it’s a springboard for mastering modern QA automation. By combining AI-driven functionality with battle-tested automation practices, it offers a unique opportunity to:

- Build Robust Frameworks: Learn how to integrate logging, retries, and test isolation into your projects.

- Test Emerging Tech: Gain hands-on experience with AI-driven systems, an increasingly in-demand skill.

- Streamline Development: Adopt containerized environments to simplify setup and ensure consistency.

Whether you’re a seasoned QA engineer or just starting, this project provides a practical, open-source foundation for sharpening your skills and delivering high-quality automation frameworks.

Get Started Now

Ready to dive in? Clone the repository and start exploring:

git clone https://github.com/marcandreuf/ag25-ai-assistant.git

cd ag25-ai-assistant

Follow the README’s setup steps to run the project in a DevContainer and add your OpenAI API key. Check out the sequence diagram to understand the architecture. Then experiment with the test suite, tweak the retry logic, or add new AI tools to make it your own.

I’d love to hear your feedback! Open an issue on GitHub or comment below with your thoughts, suggestions, or customizations. Let’s build better automation frameworks together.

This post draws inspiration from my recent blog on integrating Mermaid diagrams (read it here), reflecting my commitment to clear, visual documentation in technical projects.

Keep testing, and keep learning!